Accelerated 2D Canvas Benchmarks

The previous parts of this trilogy introduced Qt Canvas Painter and its new features. This post focuses on the performance of the accelerated 2D canvas, using benchmarks to demonstrate our holistic approach to performance.

What's new in QML Tooling in 6.11, part 1: QML Language Server (qmlls)

The latest Qt release, Qt 6.11, is just around the corner. This short blog post series presents the new features that QML tooling brings in Qt 6.11, starting with qmlls in this part 1. Parts 2 and 3 will present the qmllint warnings added since the last blog post on QML tooling, and context property configuration support for QML tooling.

Qt Gradle Plugin 1.4 is released

Qt Gradle Plugin 1.4 (QtGP) is now "at your service, M'am!" QtGP is the tool used by Qt Tools for Android Studio and by Qt Quick for Android projects, such as the Qt Quick for Android API examples. Like any Gradle plugin, it is available via Maven Central. If you missed QtGP 1.3, check out the previous blog post. Now, let’s dive into what’s new in 1.4!

Qt Extension 1.12.0 for VS Code Released

Qt Creator 19 RC released

We are happy to announce the release of Qt Creator 19 RC.

New in Qt 6.11: QRangeModel updates and QRangeModelAdapter

When introducing QRangeModel for Qt 6.10, I wrote that we'd try to tackle some limitations in future releases. In Qt 6.11, QRangeModel supports caching ranges like std::views::filter, and provides a customization point for reading and writing role data from and to items that are not gadgets, objects, or associative containers. The two biggest additions make it possible to safely operate on the underlying model data and structure without using the QAbstractItemModel API.

Qt Widgets to Qt Quick, An Application Journey Part 3

Free QML Beginner Course: Learn QML on YouTube with Qt Academy

Want to learn QML but don't know where to start? We've made it easier. Qt Academy's QML for Beginners course is now on YouTube, as we're bringing our course videos to a platform developers already use, starting with the complete QML for Beginners playlist.

Automating Repetitive GUI Interactions in Embedded Development with Spix


As embedded software developers, we all know the pain: you make a code change, rebuild your project, restart the application, and then spend precious seconds repeating the same five clicks just to reach the screen you want to test. Add a login dialog on top of that, and suddenly those seconds turn into minutes. Multiply that by a hundred iterations per day, and it’s clear: this workflow is frustrating, error-prone, and a waste of valuable development time.

In this article, we’ll look at how to automate these repetitive steps using Spix, an open-source tool for GUI automation in Qt/QML applications. We’ll cover setup, usage scenarios, and how Spix can be integrated into your workflow to save hours of clicking, typing, and waiting.

The Problem: Click Fatigue in GUI Testing

Imagine this:

- You start your application.

- The login screen appears.

- You enter your username and password.

- You click "Login".

- Only then do you finally reach the UI where you can verify whether your code changes worked.

This is fine the first few times - but if you’re doing it 100+ times a day, it becomes a serious bottleneck. While features like hot reload can help in some cases, they aren’t always applicable - especially when structural changes are involved or when you must work with "real" production data.

So, what’s the alternative?

The Solution: Automating GUI Input with Spix

Spix allows you to control your Qt/QML applications programmatically. Using scripts (typically Python), you can automatically:

- Insert text into input fields

- Click buttons

- Wait for UI elements to appear

- Take and compare screenshots

This means you can automate login steps, set up UI states consistently, and even extend your CI pipeline with visual testing. Unlike manual hot reload tweaks or hardcoding start screens, Spix provides an external, scriptable solution without altering your application logic.

Setting up Spix in Your Project

Getting Spix integrated requires a few straightforward steps:

1. Add Spix as a dependency

- Typically done via a Git submodule into your project’s third-party folder.

git submodule add git@github.com:faaxm/spix.git 3rdparty/spix

2. Register Spix in CMake

- Update your CMakeLists.txt with a find_package(Spix REQUIRED) call.

- Because of CMake quirks, you may also need to manually specify the path to Spix’s CMake modules.

# Adjust this path to wherever your Spix checkout lives
list(APPEND CMAKE_MODULE_PATH ${CMAKE_SOURCE_DIR}/3rdparty/spix/cmake/modules)
find_package(Spix REQUIRED)

3. Link against Spix

- Add Spix::Spix to your target_link_libraries call.

target_link_libraries(myApp
    PRIVATE Qt6::Core
            Qt6::Quick
            Qt6::SerialPort
            Spix::Spix
)

4. Initialize Spix in your application

- Add some lines of boilerplate code to main.cpp:

- Include the two Spix headers (AnyRpcServer for communication, and QtQmlBot).

- Start the Spix RPC server.

- Create a spix::QtQmlBot.

- Run the test server on a specified port (e.g. 9000).

#include <Spix/AnyRpcServer.h>
#include <Spix/QtQmlBot.h>

[...]

// Start the actual runner/server
spix::AnyRpcServer server;
auto bot = new spix::QtQmlBot();
bot->runTestServer(server);

At this point, your application is "Spix-enabled". You can verify this by checking for the open port (e.g. localhost:9000).

Spix can be a security risk: make sure not to expose it in any production environment, for example by enabling it only in debug builds.

Where Spix Shines

Once the setup is done, Spix can be used to automate repetitive tasks. Let’s look at two particularly useful examples:

1. Automating Logins with a Python Script

Instead of typing your credentials and clicking "Login" manually, you can write a simple Python script that:

- Connects to the Spix server on localhost:9000.

- Inputs text into the userField and passwordField items.

- Clicks the "Login" button. (Path segments in double quotes, such as "Login", are literal text identifiers: Spix looks up the item by its text.)

import xmlrpc.client

session = xmlrpc.client.ServerProxy('http://localhost:9000')
session.inputText('mainWindow/userField', 'christoph')
session.inputText('mainWindow/passwordField', 'secret')
session.mouseClick('mainWindow/"Login"')

When executed, this script takes care of the entire login flow: no typing, no clicking, no wasted time. Better yet, you can check the script into your repository so your whole team can reuse it.

During development, you can integrate this into Qt Creator by using a custom startup executable that also starts this Python script.

In a CI environment, this approach is particularly powerful, since you can ensure every test run starts from a clean state without relying on manual navigation.

2. Screenshot Comparison

Beyond input automation, Spix also supports taking screenshots. Combined with Python libraries like OpenCV or scikit-image, this opens up interesting possibilities for testing.

Example 1: Full-screen comparison

Take a screenshot of the main window and store it first:

import xmlrpc.client

session = xmlrpc.client.ServerProxy('http://localhost:9000')
[...]
session.takeScreenshot('mainWindow', '/tmp/screenshot.png')

Now we can compare it with a reference image:

from skimage import io
from skimage.metrics import structural_similarity as ssim

screenshot1 = io.imread('/tmp/reference.png', as_gray=True)
screenshot2 = io.imread('/tmp/screenshot.png', as_gray=True)

# Structural similarity index: 1.0 means the images are identical
ssim_index = ssim(screenshot1, screenshot2, data_range=screenshot1.max() - screenshot1.min())

threshold = 0.95
if ssim_index == 1.0:
    print("The screenshots are a perfect match")
elif ssim_index >= threshold:
    print("The screenshots are similar, similarity: " + str(ssim_index * 100) + "%")
else:
    print("The screenshots are not similar at all, similarity: " + str(ssim_index * 100) + "%")

This is useful for catching unexpected regressions in visual layout.

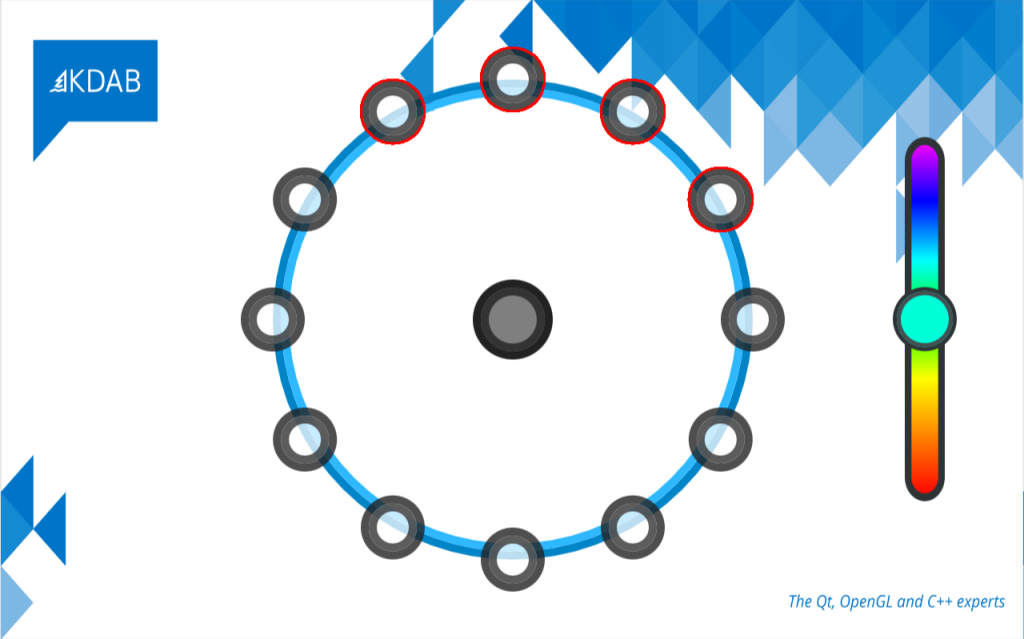

Example 2: Finding differences in the same UI

Use OpenCV to highlight pixel-level differences between two screenshots, for instance missing or misaligned elements:

import cv2

image1 = cv2.imread('/tmp/reference.png')
image2 = cv2.imread('/tmp/screenshot.png')

# Per-pixel absolute difference (both images must have the same size)
diff = cv2.absdiff(image1, image2)
# Convert the difference image to grayscale
gray = cv2.cvtColor(diff, cv2.COLOR_BGR2GRAY)
# Threshold the grayscale image to get a binary image
_, thresh = cv2.threshold(gray, 30, 255, cv2.THRESH_BINARY)
# Outline the changed regions in red on the reference image
contours, _ = cv2.findContours(thresh, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)
cv2.drawContours(image1, contours, -1, (0, 0, 255), 2)

# In a headless CI job, save the result with cv2.imwrite() instead of showing it
cv2.imshow('Difference Image', image1)
cv2.waitKey(0)

This form of visual regression testing can be integrated into your CI system: if the UI changes unintentionally, the comparison script detects it and can trigger an alert.

Defective Image

The script marks the parts of the screenshot that differ from the reference image.
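The same absdiff-and-threshold idea can also be expressed without OpenCV. The sketch below is an illustration, not part of Spix: it compares two equally sized 8-bit grayscale buffers, treats a pixel as changed when the absolute difference exceeds a threshold (30, mirroring the cv2.threshold call above), and reports how many pixels changed plus their bounding box. DiffResult and diffGrayscale are hypothetical names; unlike the Python script, it diffs grayscale images directly and does not trace contours.

```cpp
#include <algorithm>
#include <cstddef>
#include <cstdint>
#include <cstdlib>
#include <stdexcept>
#include <vector>

// Hypothetical result type: how many pixels changed and where.
struct DiffResult {
    std::size_t changedPixels = 0;
    // Bounding box of the changed pixels; only valid if changedPixels > 0.
    int minX = 0, minY = 0, maxX = 0, maxY = 0;
};

// Compares two row-major 8-bit grayscale images of the same size.
// A pixel counts as changed when |a - b| > threshold, matching the
// semantics of cv2.threshold(..., cv2.THRESH_BINARY).
DiffResult diffGrayscale(const std::vector<std::uint8_t> &a,
                         const std::vector<std::uint8_t> &b,
                         int width, int height, int threshold = 30)
{
    const std::size_t expected = static_cast<std::size_t>(width) * height;
    if (a.size() != expected || b.size() != expected)
        throw std::invalid_argument("images must both be width * height pixels");

    DiffResult result;
    for (int y = 0; y < height; ++y) {
        for (int x = 0; x < width; ++x) {
            const std::size_t i = static_cast<std::size_t>(y) * width + x;
            if (std::abs(int(a[i]) - int(b[i])) <= threshold)
                continue;
            if (result.changedPixels == 0) {
                // The first changed pixel initializes the bounding box
                result.minX = result.maxX = x;
                result.minY = result.maxY = y;
            } else {
                result.minX = std::min(result.minX, x);
                result.maxX = std::max(result.maxX, x);
                result.minY = std::min(result.minY, y);
                result.maxY = std::max(result.maxY, y);
            }
            ++result.changedPixels;
        }
    }
    return result;
}
```

In CI, a job could fail when changedPixels exceeds a small tolerance, and the bounding box points at the region to inspect.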

Recap

Spix is not a full-blown GUI testing framework like Squish, but it fills a useful niche for embedded developers who want to:

- Save time on repetitive input (like logins).

- Share reproducible setup scripts with colleagues.

- Perform lightweight visual regression testing in CI.

- Interact with their applications on embedded devices remotely.

While there are limitations (e.g. manual wait times, lack of deep synchronization with UI states), Spix provides a powerful and flexible way to automate everyday development tasks - without having to alter your application logic.

If you’re tired of clicking the same buttons all day, give Spix a try. It might just save you hours of time and frustration in your embedded development workflow.

The post Automating Repetitive GUI Interactions in Embedded Development with Spix appeared first on KDAB.

Qt Quick Certification Tests Published

In 2025, we launched the Qt Certification Testing Platform with the Qt Foundation certification, designed to validate essential Qt and C++ knowledge. Now, we're taking the next step by offering specialized certifications that focus on Qt's powerful Qt Quick technology.

QFuture ❤️ C++ coroutines

Ever since C++20 introduced coroutine support, I was wondering how this could integrate with Qt. Apparently I wasn’t the only one: before long, QCoro popped up. A really cool library! But it doesn’t use the existing future and promise types in Qt; instead it introduces its own types and mechanisms to support coroutines. I kept wondering why no-one just made QFuture and QPromise compatible – it would certainly be a more lightweight wrapper then.

With a recent project at work being a gargantuan mess of QFuture::then() continuations (ever tried async looping constructs with continuations only?), I had enough of a reason to finally sit down and implement this myself. The result: https://gitlab.com/pumphaus/qawaitablefuture.


Example

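#include <qawaitablefuture/qawaitablefuture.h>
#include <QNetworkAccessManager>
#include <QNetworkReply>
#include <QNetworkRequest>
#include <QUrl>
#include <stdexcept>

QFuture<QByteArray> fetchUrl(const QUrl &url)
{
    QNetworkAccessManager nam;
    QNetworkRequest request(url);
    QNetworkReply *reply = nam.get(request);

    // Suspend until the reply's finished() signal fires
    co_await QtFuture::connect(reply, &QNetworkReply::finished);
    reply->deleteLater();

    if (reply->error()) {
        // The exception is propagated to the returned QFuture
        throw std::runtime_error(reply->errorString().toStdString());
    }
    co_return reply->readAll();
}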

It looks a lot like what you’d write with QCoro, but it all fits in a single header and uses native QFuture features to, for example, connect to a signal. It’s really just syntactic sugar around QFuture::then(). Well, that, and a bit of effort to propagate cancellation and exceptions. Cancellation propagation works both ways: if you co_await a canceled QFuture, the “outer” QFuture of the coroutine will be canceled as well. If you cancelChain() a suspended coroutine-backed QFuture, cancellation will be propagated into the currently awaited QFuture.

What’s especially neat: You can configure where your coroutine will be resumed with co_await continueOn(...). It supports the same arguments as QFuture::then(), so for example:

QFuture<void> SomeClass::someMember()
{
    co_await QAwaitableFuture::continueOn(this);
    co_await someLongRunningProcess();

    // Due to continueOn(this), if "this" is destroyed during someLongRunningProcess(),
    // the coroutine will be destroyed after the suspension point (-> outer QFuture will be canceled)
    // and you won't access a dangling reference here.
    co_return this->frobnicate();
}

QFuture<int> multithreadedProcess()
{
    co_await QAwaitableFuture::continueOn(QtFuture::Launch::Async);

    double result1 = co_await foo();
    // resumes on a free thread in the thread pool
    process(result1);

    double result2 = co_await bar(result1);
    // resumes on a free thread in the thread pool
    double result3 = transmogrify(result2);

    co_return co_await baz(result3);
}

See the docs for QFuture::then() for details.

Also, if you want to check the canceled flag or report progress, you can access the actual QPromise that’s backing the coroutine:

QFuture<double> heavyComputation()
{
    QPromise<double> &promise = co_await QAwaitableFuture::promise();
    promise.setProgressRange(0, 100);

    double result = 0;
    for (int i = 0; i < 100; ++i) {
        promise.setProgressValue(i);
        if (promise.isCanceled()) {
            co_return result;
        }
        frobnicationStep(&result, i);
    }
    co_return result;
}

Outlook

I’m looking to upstream this. It’s too late for Qt 6.11 (already in feature freeze), but maybe 6.12? There have been some proposals for coroutine support on Qt’s Gerrit already, but none made it past the proof-of-concept stage. Hopefully this one will make it. Let’s see.

Otherwise, just use the single header from the qawaitablefuture repo. It can be included as a git submodule, or you can just vendor the header as-is.

Happy hacking!

Caveat: GCC < 13

There was a nasty bug in GCC’s coroutine support (https://gcc.gnu.org/bugzilla/show_bug.cgi?id=101367). It affects all GCC versions before 13.0.0 and effectively prevents you from writing co_await foo([&] { ... }); (i.e. you cannot await an expression involving a temporary lambda). You can rewrite this as auto f = foo([&] { ... }); co_await f; and it will work. But there’s no warning at compile time: as soon as the lambda with captures is a temporary inside the co_await expression, it will crash and burn at runtime. It is fixed in GCC 13+, but it took me a while to figure out why things went haywire on Ubuntu 22.04 (which defaults to GCC 11).

Building a Custom Qt Stack on Linux

Thinking about compiling Qt on Linux? Discover why developers build it themselves, and what you should know before diving into source, modules and configuration.

REST API Development with Qt 6

This post describes an experiment using Qt 6.7’s REST APIs to explore Stripe’s payment model, and what I learned building a small desktop developer tool.

Recent Qt releases have included several conveniences for developing clients of remote REST APIs. I recently tried it out with the Stripe payments REST API to get to grips with the Qt REST API in the real world. The overloading of the term API is unhelpful, I find, but hopefully not too confusing here.

As with almost everything I try out, I created Qt desktop tooling as a developer aid to exploring the Stripe API and its behavior. Naming things is hard, but given that I want to put a “Q” in the name, googling “cute stripes” gives lots of hits about fashion, and the other too-obvious-to-say pun, I’ve pushed it to GitHub as “Qashmere”:

setAlternatingRowColors(true);

Developers using REST APIs will generally be familiar with existing tooling such as Postman and Bruno, for synthesizing calls to collections of REST APIs. Indeed, Qashmere uses the Stripe Postman JSON definition to present the collection of APIs and parameters. Such tools have scripting interfaces and state to create workflows that a client of the REST API needs to support, like “create a payment, get the id of the payment back from the REST API and then cancel the payment with the id”, or “create a payment, get the id of the payment back from the REST API and then confirm it by id with a given credit card”.

So why create Qashmere? In addition to REST APIs, Stripe maintains objects which change state over time. The objects remain at REST until acted on by an external force, and when such an action happens, a notification is sent to clients about those state changes, giving them a chance to react. I wanted to be able to collect the REST requests/responses and the notified events and present them as they relate to the Stripe objects. Postman doesn’t know about events or about Stripe objects in particular, except that it is possible to write a script in Postman to extract the object that is part of a JSON payload. Postman also doesn’t know that if a Payment Intent is created, there is a subset of next steps that could be in a workflow, such as cancel, capture or confirm payment.

Something that I discovered in the course of trying this out is that when I confirm a Payment Intent, a new Charge object is created and sent to me with the event notification system. Experimental experiences like that help build intuition.

Stripe operates with real money, but it also provides sandboxes where synthetic payments, customers, etc. can be created and processed with synthetic payment methods and cards. As Qashmere is only useful as a developer tool or learning aid, it only works with Stripe sandboxes.

Events from Stripe are sent to pre-configured web servers owned by the client. The web servers need to have a public IP address, which is obviously not appropriate for a desktop application. A WebSocket API would be more suitable, and indeed the Stripe CLI uses a WebSocket to receive events, but that WebSocket protocol is not documented or stable. Luckily, the Stripe CLI can be used to relay events to another HTTP server, so Qashmere runs a QHttpServer for that purpose.

Implementation with Qt REST API

The QRestReply wraps a QNetworkReply pointer and provides convenience API for accessing the HTTP return code and for creating a QJsonDocument from the body of the response. It must be created manually if using QNetworkAccessManager directly. However the new QRestAccessManager wraps a QNetworkAccessManager pointer, again to provide convenience APIs and overloads for making requests that are needed in REST APIs (though some less common verbs like OPTIONS and TRACE are not built-in). The QRestAccessManager has conveniences like overloads that provide a way to supply callbacks which already take the QRestReply wrapper object as a parameter. If using a QJsonDocument request overload, the “application/json” Content-Type is automatically set in the header.

One of the inconveniences of QRestAccessManager is that in Qashmere I use an external definition of the REST API from the Postman definition which includes the HTTP method. Because the QRestAccessManager provides strongly typed API for making requests I need to do something like:

if (method == "POST") {
    rest.post(request, requestData, this, replyHandler);
} else if (method == "GET") {
    rest.get(request, this, replyHandler);
} else if (method == "DELETE") {
    rest.deleteResource(request, this, replyHandler);
}

There is a sendCustomRequest API that can be used with a string, but it does not have an overload for QJsonDocument, so the convenience of having the Content-Type header set automatically is lost. This may be an oversight in the QRestAccessManager API.
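One way to keep a method string taken from an external definition from growing into an if/else chain is a lookup table from HTTP verb to handler. The sketch below uses only the standard library so it stands alone; RequestSpec, Handler and dispatchRequest are hypothetical names, and in a Qt application each handler would wrap one of the typed QRestAccessManager calls such as get(), post() or deleteResource().

```cpp
#include <functional>
#include <stdexcept>
#include <string>
#include <unordered_map>

// Hypothetical request description: the method would come from the
// Postman collection, the URL and body from the user's input.
struct RequestSpec {
    std::string method;
    std::string url;
    std::string body;
};

// Each handler stands in for one strongly typed call such as
// rest.get(), rest.post() or rest.deleteResource(). It returns a string
// here only so that the sketch can run without Qt.
using Handler = std::function<std::string(const RequestSpec &)>;

// Looks up the handler for the request's HTTP verb and invokes it.
// Unknown verbs throw instead of being silently ignored.
std::string dispatchRequest(const RequestSpec &spec,
                            const std::unordered_map<std::string, Handler> &handlers)
{
    const auto it = handlers.find(spec.method);
    if (it == handlers.end())
        throw std::invalid_argument("unsupported HTTP method: " + spec.method);
    return it->second(spec);
}
```

A side benefit of this design: an unsupported verb fails loudly in one place, whereas the if/else version silently does nothing for it.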

Another missing feature is URL parameter interpolation. Many REST APIs are described as something like /v1/object/:object_id/cancel, and it would be convenient to have a safe way to interpolate the parameters into the URL, such as:

QUrl result = QRestAccessManager::interpolatePathParameters(
    "/v1/accounts/:account_id/object/:object_id/cancel", {
        {"account_id", "acc_1234"},
        {"object_id", "obj_5678"}
    }
);

Such a helper would avoid bugs such as a user-supplied parameter that contains a slash.
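To make the idea concrete, here is a standard-C++ sketch of what such a helper could do. It is not Qt API: interpolatePathParameters and percentEncode are hypothetical free functions, and a Qt implementation would more likely build on QUrl::toPercentEncoding(). Each ":name" path segment is replaced by its value, percent-encoded so that a slash in user input becomes %2F instead of starting a new path segment, and a missing parameter is reported as an error.

```cpp
#include <cctype>
#include <map>
#include <stdexcept>
#include <string>

// Percent-encode everything except RFC 3986 "unreserved" characters
// (ALPHA / DIGIT / "-" / "." / "_" / "~").
std::string percentEncode(const std::string &value)
{
    static const char hex[] = "0123456789ABCDEF";
    std::string out;
    for (unsigned char c : value) {
        if (std::isalnum(c) || c == '-' || c == '.' || c == '_' || c == '~') {
            out += static_cast<char>(c);
        } else {
            out += '%';
            out += hex[c >> 4];
            out += hex[c & 0x0F];
        }
    }
    return out;
}

// Replace each ":name" path segment with the encoded value of params["name"].
std::string interpolatePathParameters(const std::string &pattern,
                                      const std::map<std::string, std::string> &params)
{
    std::string result;
    std::size_t pos = 0;
    while (pos < pattern.size()) {
        std::size_t end = pattern.find('/', pos);
        if (end == std::string::npos)
            end = pattern.size();
        const std::string segment = pattern.substr(pos, end - pos);
        if (!segment.empty() && segment[0] == ':') {
            const auto it = params.find(segment.substr(1));
            if (it == params.end())
                throw std::invalid_argument("missing path parameter: " + segment);
            result += percentEncode(it->second);
        } else {
            result += segment;
        }
        if (end < pattern.size())
            result += '/';  // keep the separator that ended this segment
        pos = end + 1;
    }
    return result;
}
```

With this approach, `interpolatePathParameters("/v1/object/:id", {{"id", "a/b"}})` yields `/v1/object/a%2Fb`, so the server still sees a single path segment.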

Coding Con Currency

In recent years I’ve been writing and reading more TypeScript/Angular code that consumes REST services, and less C++. I’ve enjoyed the way Promises work in that environment, making sequences of REST requests, for example, easy to write and read. A test of a pseudo API could await requests to complete and invoke the next one with something like:

requestFactory.setBaseURL("http://some_service.com");

async function testWorkflow(username: string, password: string) {
    const loginRequest = requestFactory.makeRequest("/login");
    const loginRequestData = new Map<string, string>();
    loginRequestData.set("username", username);
    loginRequestData.set("password", password);
    const loginResponse = await requestAPI.post(
        loginRequest, loginRequestData);
    const bearerToken = loginResponse.getData();
    requestAPI.setBearerToken(bearerToken);

    const listingRequest = requestFactory.makeRequest("/list_items");
    const listingResponse = await requestAPI.get(listingRequest);
    const listing = JSON.parse(listingResponse.getData());

    const firstItemRequest = requestFactory.makeRequest(
        "/retrieve_item/:item_id",
        {
            item_id: listing[0].item_id
        }
    );
    const firstItem = await requestAPI.get(firstItemRequest);
}

The availability of async functions and Promises to await on makes a test like this quite easy to write, and in-application use of the API relies on the same Promises, so there is little friction between application code and test code.

I wanted to see if I could recreate something like that based on the Qt networking APIs. I briefly tried using C++20 coroutines because they would allow a style closer to async/await, but the integration friction with existing Qt types was higher than I wanted for an experiment.

Using the methods in QtFuture however, we already have a way to create objects representing the response from a REST API. The result is similar to the Typescript example, but with different ergonomics, using .then instead of the async and await keywords.

struct RestRequest
{
    QString method;
    QString requestUrl;
    QHttpHeaders headers;
    QHash<QString, QString> urlParams;
    QUrlQuery queryParams;
    std::variant<QUrlQuery, QJsonDocument> requestData;
};

struct RestResponse
{
    QJsonDocument jsonDoc;
    QHttpHeaders headers;
    QNetworkReply::NetworkError error;
    QUrl url;
    int statusCode;
};

QFuture<RestResponse> makeRequest(RestRequest restRequest)
{
    auto url = interpolatePathParameters(
        restRequest.requestUrl,
        restRequest.urlParams);
    auto request = requestFactory.createRequest(url);
    auto requestBodyDoc = extractRequestContent(restRequest.requestData);
    auto requestBody = requestBodyDoc.toJson(QJsonDocument::Compact);

    // The callback is a dummy; the reply is observed via QtFuture::connect below
    auto reply = qRestManager.sendCustomRequest(request,
                                                restRequest.method.toUtf8(),
                                                requestBody,
                                                &qnam,
                                                [](QRestReply &) {});

    return QtFuture::connect(reply, &QNetworkReply::finished).then(
        [reply]() {
            QRestReply restReply(reply);
            auto responseDoc = restReply.readJson();
            if (!responseDoc) {
                throw std::runtime_error("Failed to read response");
            }
            RestResponse response;
            response.jsonDoc = *responseDoc;
            response.statusCode = restReply.httpStatus();
            response.error = restReply.error();
            response.headers = reply->headers();
            response.url = reply->url();
            return response;
        }
    );
}

The QRestAccessManager API requires the creation of a dummy response function when creating a custom request, because it is not really designed to be used this way. The result is an API that accepts a request and returns a QFuture with the QJsonDocument content. While it is possible for a REST endpoint to return something else, we can follow the Qt philosophy of making the most common case as easy as possible, while leaving most of the rest possible another way. This utility makes writing unit tests relatively straightforward too:

RemoteAPI remoteApi;
remoteApi.setBaseUrl(QUrl("https://dog.ceo"));

auto responseFuture = remoteApi.makeRequest(
    {"GET",
     "api/breed/:breed/:sub_breed/images/random",
     {},
     {
         {"breed", "wolfhound"},
         {"sub_breed", "irish"}
     }});

QFutureWatcher<RestResponse> watcher;
QSignalSpy spy(&watcher, &QFutureWatcherBase::finished);
watcher.setFuture(responseFuture);
QVERIFY(spy.wait(10000));

auto jsonObject = responseFuture.result().jsonDoc.object();
QCOMPARE(jsonObject["status"], "success");
QRegularExpression regex(
    R"(https://images\.dog\.ceo/breeds/wolfhound-irish/[^.]+\.jpg)");
QVERIFY(regex.match(jsonObject["message"].toString()).hasMatch());

The result is quite similar to the TypeScript above, but only because we can use spy.wait. In application code, we still need to use .then with a callback, but we can additionally use .onFailed and .onCanceled instead of making multiple signal/slot connections.

With the addition of QtFuture::whenAll, it is easy to make multiple REST requests at once and react when they are all finished, so perhaps something else has been gained too, compared to a signal/slot model:

RemoteAPI remoteApi;
remoteApi.setBaseUrl(QUrl("https://dog.ceo"));

auto responseFuture = remoteApi.requestMultiple({
    {
        "GET",
        "api/breeds/list/all",
    },
    {"GET",
     "api/breed/:breed/:sub_breed/images/random",
     {},
     {{"breed", "german"}, {"sub_breed", "shepherd"}}},
    {"GET",
     "api/breed/:breed/:sub_breed/images/random/:num_results",
     {},
     {{"breed", "wolfhound"},
      {"sub_breed", "irish"},
      {"num_results", "3"}}},
    {"GET", "api/breed/:breed/list", {}, {{"breed", "hound"}}},
});

QFutureWatcher<QList<RestResponse>> watcher;
QSignalSpy spy(&watcher, &QFutureWatcherBase::finished);
watcher.setFuture(responseFuture);
QVERIFY(spy.wait(10000));

auto four_responses = responseFuture.result();
QCOMPARE(four_responses.size(), 4);

QCOMPARE(four_responses[0].jsonDoc.object()["status"], "success");
QVERIFY(four_responses[0].jsonDoc.object()["message"].
        toObject()["greyhound"].isArray());

QRegularExpression germanShepherdRegex(
    R"(https://images\.dog\.ceo/breeds/german-shepherd/[^.]+\.jpg)");
QCOMPARE(four_responses[1].jsonDoc.object()["status"], "success");
QVERIFY(germanShepherdRegex.match(
    four_responses[1].jsonDoc.object()["message"].toString()).hasMatch());

QRegularExpression irishWolfhoundRegex(
    R"(https://images\.dog\.ceo/breeds/wolfhound-irish/[^.]+\.jpg)");
QCOMPARE(four_responses[2].jsonDoc.object()["status"], "success");
auto irishWolfhoundList =
    four_responses[2].jsonDoc.object()["message"].toArray();
QCOMPARE(irishWolfhoundList.size(), 3);
QVERIFY(irishWolfhoundRegex.match(irishWolfhoundList[0].toString()).hasMatch());
QVERIFY(irishWolfhoundRegex.match(irishWolfhoundList[1].toString()).hasMatch());
QVERIFY(irishWolfhoundRegex.match(irishWolfhoundList[2].toString()).hasMatch());

QCOMPARE(four_responses[3].jsonDoc.object()["status"], "success");
auto houndList = four_responses[3].jsonDoc.object()["message"].toArray();
QCOMPARE_GE(houndList.size(), 7);
QVERIFY(houndList.contains("afghan"));
QVERIFY(houndList.contains("basset"));
QVERIFY(houndList.contains("blood"));
QVERIFY(houndList.contains("english"));
QVERIFY(houndList.contains("ibizan"));
QVERIFY(houndList.contains("plott"));
QVERIFY(houndList.contains("walker"));

setAutoDeleteReplies(false);

I attempted to use new API additions in recent Qt 6 versions to interact with a few real-world REST services. The additions are valuable, but there seem to be a few places where improvements might be possible. My attempt to make the API feel closer to what developers in other environments might be accustomed to had some success, but I’m not sure QFuture is really intended to be used this way.

Do readers have any feedback? Would using QCoro improve the coroutine experience? Is it very unusual to create an application with QWidgets instead of QML these days? Should I have used PyQt and the python networking APIs?

Debugging Qt WebAssembly - DWARF

One of the most tedious tasks a developer will do is debugging a nagging bug. It's worse when it's a web app, and even worse when it's a WebAssembly web app.

The easiest way to debug Qt WebAssembly is to configure with the -g argument, or with CMAKE_BUILD_TYPE=Debug. Emscripten then embeds DWARF symbols in the wasm binaries.

NOTE: Debugging wasm files with DWARF works only in the Chrome browser, with the help of the C/C++ DevTools Support (DWARF) browser extension. If you are using Safari or Firefox, or do not want to or cannot install a browser extension, you will need to generate source maps, which I will look at in my next blog post.

DWARF debugging

You also need to enable DWARF in the browser developer tools settings, but you do not need symlinks to the source directories as you would with source maps, because the binaries embed the full directory paths. Like magic!

Emscripten embeds DWARF symbols into the binaries built with -g by default, so re-building Qt or your application in debug mode is all you need to do.

By default, Qt builds its debug libraries with the -g2 argument, which produces less debugging information but results in faster link times. To preserve the full debug symbols, you need to build Qt in debug mode with the -g or -g3 argument; both do the same thing.

Using DWARF debugger

You can then step through your code as you would when debugging a desktop application.

Boosting PySide with C++ models

In a recent series of blog posts, we have demonstrated that Python and Qt fit together very well. Due to its accessibility, ease-of-use and third-party ecosystem, it is really straightforward to prototype and productize applications. Still, Python has one significant disadvantage: It is not necessarily the most performant programming language.

Continue reading Boosting PySide with C++ models at basysKom GmbH.

Rust Might be the Right Replacement for C++

Up-and-coming programming language Rust is designed for memory safety without sacrificing performance.

It's Baaack! Qt Speech Returns for Qt 6.4.0 Release

The Qt Speech module was introduced in Qt 5.8.0. I made a blog post at that time (June 2017), looking at how it provided cross-platform support for text-to-speech. With the Qt 6.0.0 release, Qt Speech was one of the modules that were no longer available. In the upcoming Qt 6.4.0 release, it is again going to be part of Qt.

What's New in Qt 6.5?

Explore the latest tools and modules in the new Qt 6.5.0 LTS release.

How to Configure and Use the Qt Creator-GitLab Integration

Qt Creator IDE has a helpful but not well-documented plugin that allows you to browse and clone projects from a GitLab server.

Get Ready for C++23

Slated for release in December, C++23 will include incremental improvements, clarifications, and removal of previously deprecated features.

Mastering Qt Multithreading Without Losing Your Mind

As applications get more complex and performance expectations rise, multithreading becomes essential. In my experience, Qt provides a powerful — […]

Meet the Qt Community in Vienna – Qt Day Austria & CEE 2025

On November 13, the Qt and QML community will meet in Vienna for a full day of inspiring talks, networking and knowledge sharing.

Join developers, tech leads and companies for a day full of insights, real world experiences and community exchange.

Free to attend, but seats are limited: first come, first served! Read on for all the details:

Qt WebEngine Custom Server Certificates

In this blog post, we’re having a look at how we added support for custom server certificates to Qt WebEngine. This way an application can talk to a server using a self-signed TLS certificate without adding it to the system-wide certificate store.

Continue reading Qt WebEngine Custom Server Certificates at basysKom GmbH.

10 Years of Qt OPC UA

At the beginning of 2025, I was searching through the version history of Qt OPC UA, trying to find out when a certain issue was introduced. At some point I got curious: how far back does this thing go?! It turns out that the first git commit is dated 25th of September 2015, which means we have been doing this for over 10 years now!

Qt’s Approach to Input Validation: Masks and Validators Explained

Learn how these powerful tools help manage and validate user input to avoid errors and improve your app's UX.

A taxonomy of modern user interfaces

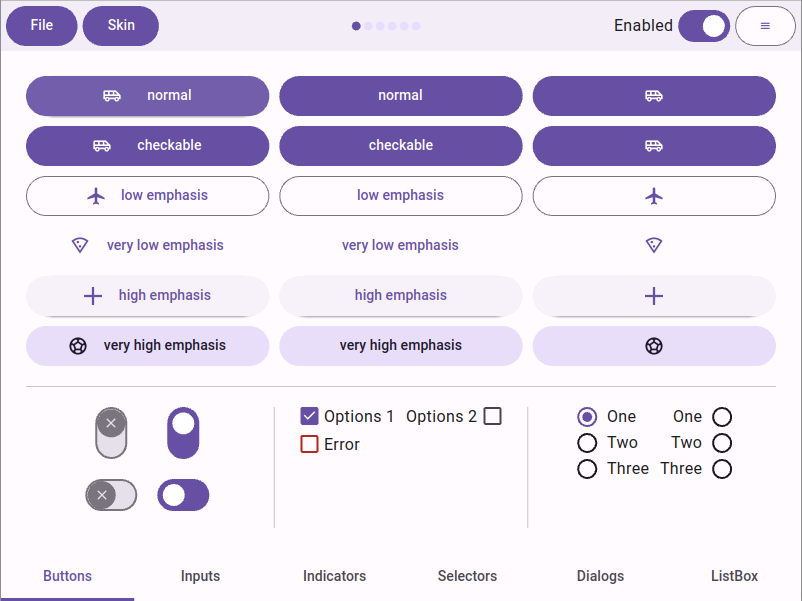

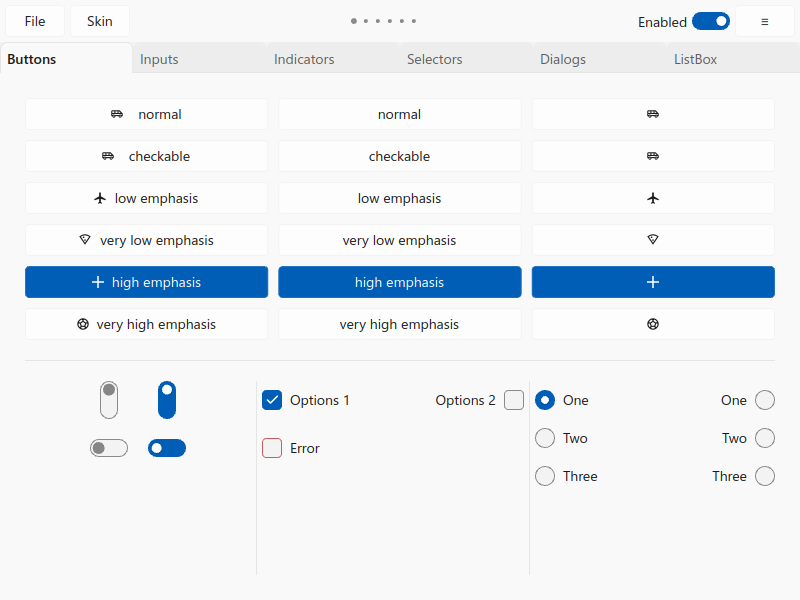

We implemented Google’s Material 3 and Microsoft’s Fluent 2 design systems for QSkinny (still an ongoing effort), as shown in the screenshots below. This required implementing some new features, some of which hint at general paradigms shared by many other design systems.

Conversely, some features often found in commercial design systems seem to have been deliberately left out of these two ‘generic’ ones.

Google’s Material 3 design system


Microsoft’s Fluent 2 design system


Here is a subjective list of requirements for new design systems:

1. Subtle shadows everywhere

Of course, the days of thick 90s-style drop shadows are gone, but apparently so is the completely flat style of the first iPhone. Shadows (and other means of elevation) are used subtly to draw attention and to show levels of visual hierarchy.

For instance, a hovered or focused button has a higher elevation than a resting button, and a dialog or popup an even higher one. Typically, these shadows have a minimal x/y offset and a semi-transparent color.
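To make the idea of elevation levels a bit more concrete, here is a minimal, toolkit-agnostic C++ sketch. The `Surface` enum, the `Shadow` struct, the helper names and all numbers are hypothetical and only illustrate the pattern described above: resting controls sit lowest, hovered or focused ones a bit higher, and popups and dialogs higher still. Each level gets a small offset and a semi-transparent color. This is not how QSkinny stores its shadows.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>

// Hypothetical surface kinds; a real toolkit tracks hover/focus itself.
enum class Surface { RestingButton, HoveredButton, FocusedButton, Popup, Dialog };

// Illustrative elevation levels: resting < hovered/focused < popup/dialog.
int elevationLevel( Surface surface )
{
    switch ( surface )
    {
        case Surface::RestingButton:
            return 0;
        case Surface::HoveredButton:
        case Surface::FocusedButton:
            return 1;
        case Surface::Popup:
        case Surface::Dialog:
            return 3;
    }
    return 0;
}

struct Shadow
{
    double offsetX;     // logical pixels
    double offsetY;     // logical pixels
    double blurRadius;  // logical pixels
    std::uint32_t argb; // 0xAARRGGBB
};

// Subtle shadow for a given level: small y offset, growing blur and a
// semi-transparent black whose alpha grows by 0x1A (about 10%) per level.
// All numbers are made up for illustration.
Shadow shadowForLevel( int level )
{
    assert( level >= 0 );
    if ( level == 0 )
        return { 0.0, 0.0, 0.0, 0x00000000u }; // resting: no shadow

    const auto alpha = std::min< std::uint32_t >(
        0x1Au * static_cast< std::uint32_t >( level ), 0xFFu );

    return { 0.0, 0.5 * level, 2.0 * level, alpha << 24 }; // RGB = 0 (black)
}
```

Keeping the mapping from "state" to "elevation" separate from "elevation" to "shadow" means a skin could swap the shadow look without touching the controls.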

Material has a guide on elevation that lists shadows as one element and colors as another. Interestingly, Material 3 uses shadows less than its predecessor Material 2 and relies more on coloring instead.

Fluent 2 similarly lists shadows and box strokes (i.e. box borders) as elements of elevation.

As a consequence, shadows should be first-class citizens in UI toolkits: Defining a shadow on a control should be as easy as setting a color or a font.

Here is how QSkinny defines the gradient and shadow of the QskMenu class for Fluent 2:

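```cpp
// Part of the Fluent 2 skin setup; pal (the palette) and theme are
// provided by the surrounding skin code.
using Q = QskMenu;

setBoxBorderColors( Q::Panel, pal.strokeColor.surface.flyout ); // border ("box stroke")
setGradient( Q::Panel, pal.background.flyout.defaultColor );    // a single fill color
setShadowMetrics( Q::Panel, theme.shadow.flyout.metrics );      // shadow geometry
setShadowColor( Q::Panel, theme.shadow.flyout.color );          // semi-transparent shadow color
```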

Fluent 2 menus in QSkinny

Side note: Inner shadows (as opposed to drop shadows) seem to be very rare and have only been observed in sophisticated controls for commercial design systems.

2. Gradients are gone… or aren’t they?

Material 3 comes with an incredibly sophisticated color/palette system (see below), but surfaces like button panels consist of one color only. (Sometimes two colors need to be “flattened” into one, e.g. a semi-transparent hover color on top of an opaque button panel color.)
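“Flattening” as described above amounts to plain source-over alpha compositing. Here is a minimal sketch that assumes colors are packed as 0xAARRGGBB (like `QRgb`) and that the base color is fully opaque. The function name `flatten` is made up for illustration and is not a QSkinny API.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>

using Rgb = std::uint32_t; // 0xAARRGGBB, like QRgb

// Flatten a semi-transparent overlay (e.g. a hover color) onto an opaque
// base (e.g. a button panel) using source-over compositing. Because the
// base is opaque, the result is opaque as well.
Rgb flatten( Rgb overlay, Rgb opaqueBase )
{
    assert( ( opaqueBase >> 24 ) == 0xFFu );
    const double a = ( ( overlay >> 24 ) & 0xFFu ) / 255.0;

    auto channel = [ & ]( int shift ) -> Rgb {
        const double o = ( overlay >> shift ) & 0xFFu;
        const double b = ( opaqueBase >> shift ) & 0xFFu;
        return static_cast< Rgb >( std::lround( a * o + ( 1.0 - a ) * b ) );
    };

    return 0xFF000000u | ( channel( 16 ) << 16 ) | ( channel( 8 ) << 8 ) | channel( 0 );
}
```

Because the result is a single opaque color, the panel can then be drawn with one fill, and no blending is needed at paint time.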

The same goes for Fluent 2: The design system lists several shades of its iconic blue and more flavors of gray, without any gradients.

However, many commercial design systems still use gradients for “2.5D-style” effects. They are applied as subtly as the aforementioned shadows, but they are used a lot.

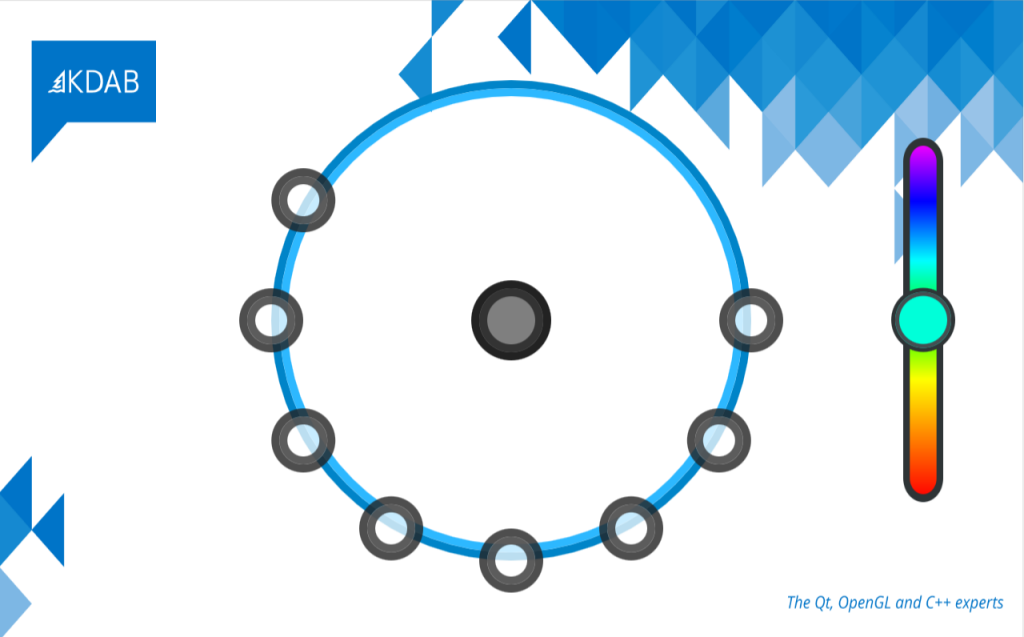

The QSkinny example below shows two small buttons on the left with subtle linear gradients (45 degrees) on the inner panel and a reversed gradient on the outer button ring. This creates both an elevation and a “texture” effect without actually using textures (i.e. images). The shadows add to the depth, but even with gradients alone, the sense of elevation would still be there.
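As a rough illustration of these two buttons, the following sketch computes where a point falls on a 45 degree linear gradient inside a box. It then samples the inner panel and the reversed outer ring from that position. This is a standalone C++ approximation under our own assumptions (plain RGB interpolation, y pointing down). It is not QSkinny's gradient implementation, and `panelColor` and `ringColor` are hypothetical helpers.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <iterator>

struct Color { double r, g, b; };

Color mix( const Color& from, const Color& to, double t )
{
    return { from.r + ( to.r - from.r ) * t,
             from.g + ( to.g - from.g ) * t,
             from.b + ( to.b - from.b ) * t };
}

// Position (0..1) of the point (x, y) on a linear gradient that crosses a
// w x h box at angleDeg (0 = left to right, 45 = top-left to bottom-right,
// with y pointing down as in Qt).
double linearPosition( double x, double y, double w, double h, double angleDeg )
{
    assert( w > 0.0 && h > 0.0 );
    const double pi = std::acos( -1.0 );
    const double dx = std::cos( angleDeg * pi / 180.0 );
    const double dy = std::sin( angleDeg * pi / 180.0 );

    // Project the four corners onto the gradient direction to find where
    // the gradient starts (t = 0) and stops (t = 1).
    const double corners[] = { 0.0, w * dx, h * dy, w * dx + h * dy };
    const double startPos = *std::min_element( std::begin( corners ), std::end( corners ) );
    const double stopPos = *std::max_element( std::begin( corners ), std::end( corners ) );

    return std::clamp( ( x * dx + y * dy - startPos ) / ( stopPos - startPos ), 0.0, 1.0 );
}

// Inner panel: light at the top-left, dark at the bottom-right.
Color panelColor( double x, double y, double w, double h, Color light, Color dark )
{
    return mix( light, dark, linearPosition( x, y, w, h, 45.0 ) );
}

// Outer ring: the same 45 degree gradient, reversed.
Color ringColor( double x, double y, double w, double h, Color light, Color dark )
{
    return mix( light, dark, 1.0 - linearPosition( x, y, w, h, 45.0 ) );
}
```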

On the right is a gauge with conical gradients: The inner one goes from dark green to lighter green, and the outer one tries to mimic a chromatic effect. Radial gradients are not shown here, but are equally usable.

Gradients add depth and elevation

Outlook: For more sophistication, there might be a need in the future for multi-dimensional gradients like the one below: a conical gradient (from purple to blue) combined with a radial one (from transparent to full hue):
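One way to think about such a multi-dimensional gradient is as two gradients that drive different channels: the angle around the center picks the color, and the distance from the center picks the opacity. The sketch below is a hypothetical, standalone C++ sampler built on that assumption. It is not an existing QSkinny or Qt API.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

struct Rgba { double r, g, b, a; };

// Hypothetical two-dimensional gradient sampled at (x, y) around the center
// (cx, cy): the color comes from a conical gradient (angle around the
// center), the opacity from a radial gradient (distance from the center).
Rgba sampleGauge( double x, double y, double cx, double cy, double radius,
                  const Rgba& from, const Rgba& to )
{
    assert( radius > 0.0 );
    const double pi = std::acos( -1.0 );

    // Conical part: angle measured clockwise from 3 o'clock (y points down),
    // mapped from [0, 2 pi) to [0, 1).
    double angle = std::atan2( y - cy, x - cx );
    if ( angle < 0.0 )
        angle += 2.0 * pi;
    const double tAngle = angle / ( 2.0 * pi );

    // Radial part: 0 at the center, 1 at the rim and beyond.
    const double tRadius = std::clamp( std::hypot( x - cx, y - cy ) / radius, 0.0, 1.0 );

    return { from.r + ( to.r - from.r ) * tAngle,
             from.g + ( to.g - from.g ) * tAngle,
             from.b + ( to.b - from.b ) * tAngle,
             tRadius }; // transparent in the middle, full hue at the rim
}
```

A full 360 degree conical gradient has a visible seam where the last color meets the first. A gauge that only spans an arc avoids this.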

A gauge generated by AI showing multi-dimensional gradients

3. Those modern palettes aren’t what they used to be

Google created a new color system called HCT (hue, chroma, tone), built on older ones like HSL (hue, saturation, lightness). The difference is that HCT is “perceptually accurate”, i.e. colors that look lighter to the human eye also have a higher tone value in HCT.

By changing only the chroma (~ saturation) and tone (~ lightness) of a color, one can generate a whole palette to be used within a UI that matches the input color nicely; as an example, see the Material 3 palette on Figma.
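In HCT, tone is the CIELAB lightness L*, while hue and chroma come from the CAM16 color appearance model, so a complete HCT implementation is beyond a blog snippet. The special case of chroma 0 is simple, though: the palette entries are grays whose L* equals the requested tone. Here is a minimal sketch of such a neutral tonal palette, assuming sRGB output. The function names are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstdint>

// CIELAB constants (CIE standard values).
constexpr double kEpsilon = 216.0 / 24389.0;
constexpr double kKappa = 24389.0 / 27.0;

// Tone (CIELAB L*, 0..100) -> relative luminance Y (0..1).
double luminanceFromTone( double tone )
{
    assert( tone >= 0.0 && tone <= 100.0 );
    const double fy = ( tone + 16.0 ) / 116.0;
    const double fy3 = fy * fy * fy;
    return fy3 > kEpsilon ? fy3 : tone / kKappa;
}

// Linear channel (0..1) -> 8-bit sRGB value (sRGB transfer function).
std::uint32_t encodeSrgb( double linear )
{
    const double v = linear <= 0.0031308
        ? 12.92 * linear
        : 1.055 * std::pow( linear, 1.0 / 2.4 ) - 0.055;
    return static_cast< std::uint32_t >( std::lround( std::clamp( v, 0.0, 1.0 ) * 255.0 ) );
}

// Neutral palette entry (chroma 0): an opaque gray whose L* equals the tone.
std::uint32_t neutralTone( double tone )
{
    const std::uint32_t v = encodeSrgb( luminanceFromTone( tone ) );
    return 0xFF000000u | ( v << 16 ) | ( v << 8 ) | v;
}
```

For example, `neutralTone( 50 )` yields the mid gray 0xFF777777. Stepping the tone from 0 to 100 gives a palette from black to white in roughly even perceptual steps.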

In terms of accessibility, by choosing e.g. background and font colors with a big tone difference, one can be reasonably sure that text is comfortably legible.
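You can check the link between tone and legibility numerically. The sketch below computes the tone (L*) of an sRGB color and the WCAG 2.x contrast ratio, a widely used legibility yardstick; both are derived from the same relative luminance. WCAG is our choice for illustration here, and the function names are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstdint>

// sRGB 8-bit channel -> linear value (0..1).
double linearize( int channel8 )
{
    assert( channel8 >= 0 && channel8 <= 255 );
    const double c = channel8 / 255.0;
    return c <= 0.04045 ? c / 12.92 : std::pow( ( c + 0.055 ) / 1.055, 2.4 );
}

// Relative luminance Y of a 0xRRGGBB color (sRGB primaries).
double luminance( std::uint32_t rgb )
{
    return 0.2126 * linearize( static_cast< int >( ( rgb >> 16 ) & 0xFFu ) )
         + 0.7152 * linearize( static_cast< int >( ( rgb >> 8 ) & 0xFFu ) )
         + 0.0722 * linearize( static_cast< int >( rgb & 0xFFu ) );
}

// Tone as used by HCT: CIELAB L* (0..100), computed from Y.
double tone( std::uint32_t rgb )
{
    const double y = luminance( rgb );
    const double epsilon = 216.0 / 24389.0;
    const double kappa = 24389.0 / 27.0;
    return y > epsilon ? 116.0 * std::cbrt( y ) - 16.0 : kappa * y;
}

// WCAG 2.x contrast ratio between two colors (1 to 21).
double contrastRatio( std::uint32_t a, std::uint32_t b )
{
    const double la = luminance( a );
    const double lb = luminance( b );
    return ( std::max( la, lb ) + 0.05 ) / ( std::min( la, lb ) + 0.05 );
}
```

Black on white (a tone difference of 100) gives the maximum ratio of 21:1. A gray with a tone of about 50 on white only reaches about 4.5:1.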

So even developers without design experience can create a visually appealing UI, without even knowing the main input color in advance. Generating the palette from an input color is used e.g. on Android, where the user can choose a color or just upload an image, and all controls adapt to the main color.

Side note from the Qt world: QColor::darker() and QColor::lighter() are not fine-grained enough for this purpose.

With the additional support of changing colors dynamically (see below), this means that palettes can easily be generated for both day and night mode from one or two input colors; one could also easily change between different brand colors.

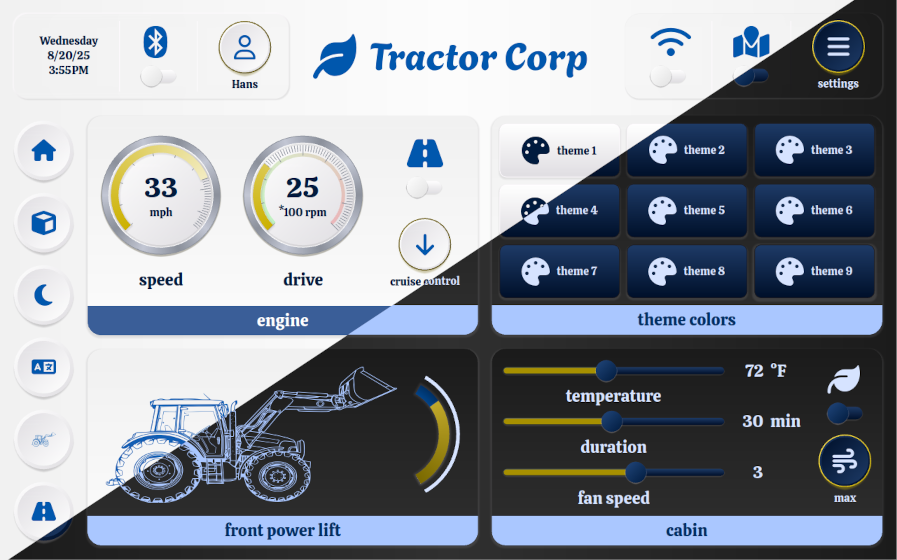

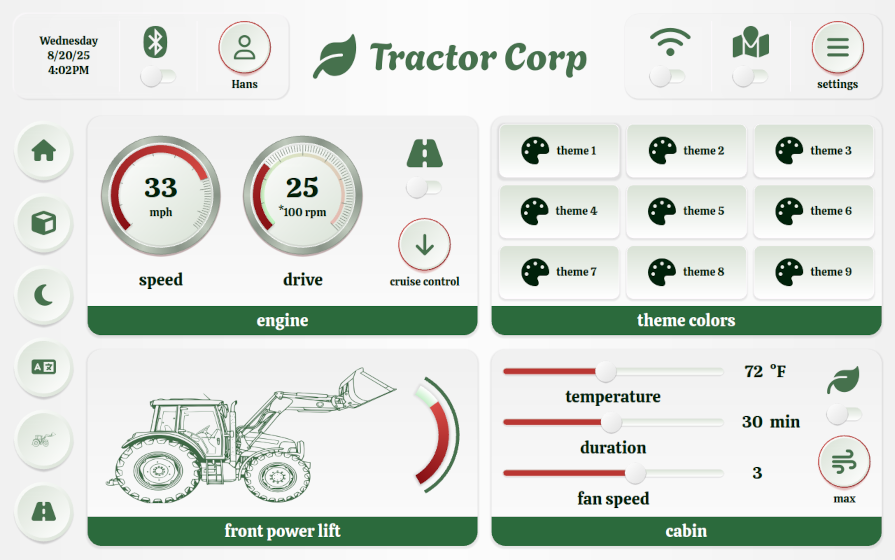

The QSkinny tractor example shows how to extend the Material 3 palette with more colors and how to change between day/night mode as well as different brands easily, as switching colors and other hints is supported directly in the framework.

QSkinny tractor example in day/night mode with “Ikea colors”


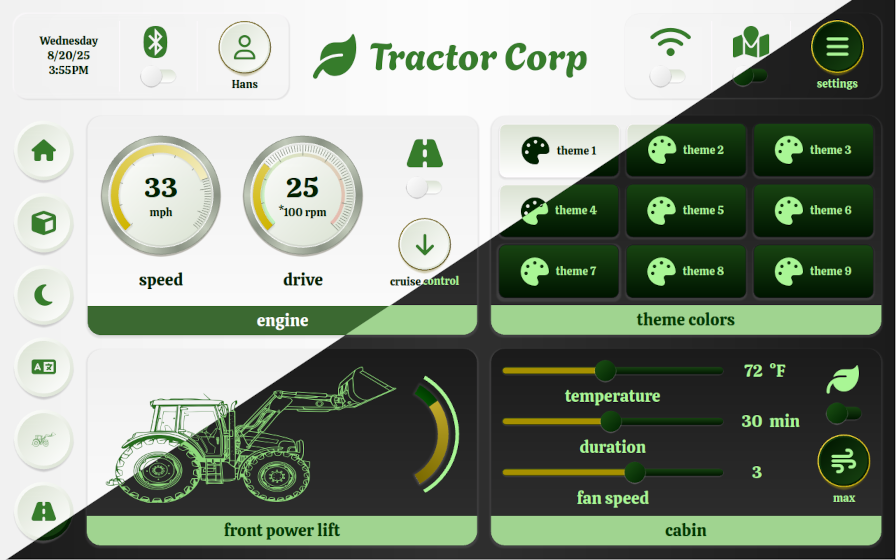

QSkinny tractor example in day/night mode with “John Deere colors”


For an online demo of this app, see the tractor example on the main page.

So while changing between branding themes as above might not be a day-to-day use case, changing between day and night mode certainly is. This means that a UI toolkit should be able to change skin colors dynamically.

Note: Ideally, not only colors can be changed, but any type of skin definition like box roundings, fonts etc. QSkinny treats every definition token, or so-called “skin hint”, the same way and can therefore exchange any of them at runtime.
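The idea of treating every token the same way can be sketched in a few lines. The following C++ is a hypothetical, heavily simplified hint table and not QSkinny's actual skin API. Hints are stored under string tokens, and swapping the whole table (e.g. from day to night mode) notifies listeners so that controls can re-read their hints and repaint.

```cpp
#include <cassert>
#include <cstdint>
#include <functional>
#include <map>
#include <string>
#include <utility>
#include <vector>

// Hypothetical value type: colors as 0xAARRGGBB, metrics as plain numbers.
using HintValue = std::uint32_t;
using HintTable = std::map< std::string, HintValue >;

class SkinHints
{
  public:
    using Listener = std::function< void() >;

    // Replace the whole table (e.g. day -> night) and notify all listeners.
    void setHints( HintTable hints )
    {
        m_hints = std::move( hints );
        for ( const auto& notify : m_listeners )
            notify(); // controls would re-read their hints and repaint here
    }

    // Look up a hint; unknown tokens fall back to a default value.
    HintValue hint( const std::string& token, HintValue fallback = 0 ) const
    {
        const auto it = m_hints.find( token );
        return it != m_hints.end() ? it->second : fallback;
    }

    void onChanged( Listener listener )
    {
        assert( listener ); // an empty std::function cannot be called
        m_listeners.push_back( std::move( listener ) );
    }

  private:
    HintTable m_hints;
    std::vector< Listener > m_listeners;
};
```

In a real toolkit, the value type would be a variant covering colors, metrics, fonts and so on. Switching brand or day/night mode would still just be another call to `setHints()`.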

4. Graphics: Write once, change all the time

Graphics like the button icons and the bigger tractor icon in the example above should be flexible in several ways:

They should adapt to color changes, as they are typically part of a definition that might change with the current skin; i.e. if the font and background colors change, then so must the icons.

Equally important, they should be flexible in size. With the Material 3 skin, for example, buttons can have a different size in pixels depending on the physical size of the screen: On smartphones, which have a high resolution and a small physical size, buttons tend to be bigger in pixels than on tablets or TVs. This means that the icons might also have to grow with the buttons; when using layouts, they might even have to grow dynamically at runtime when a window is resized.

For the use case of different physical sizes, Material 3 supports so-called “density-independent pixels”; Fluent 2 has a similar concept named “device-independent pixels”.
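The arithmetic behind these units is a simple scale factor. Here is a minimal sketch, assuming the Android convention of 160 dp per inch for Material and the Windows convention of 96 device-independent pixels per inch. Fluent 2 targets several platforms, so the base density may differ elsewhere. The function names are hypothetical.

```cpp
#include <cassert>

// Android / Material convention: 1 dp = 1/160 inch, so px = dp * dpi / 160.
double dpToPixels( double dp, double dpi )
{
    assert( dpi > 0.0 );
    return dp * dpi / 160.0;
}

// Windows convention: 1 device-independent pixel = 1/96 inch,
// so px = dip * dpi / 96.
double dipToPixels( double dip, double dpi )
{
    assert( dpi > 0.0 );
    return dip * dpi / 96.0;
}
```

For example, a 48 dp touch target is 48 px on a 160 dpi screen but 144 px on a 480 dpi phone, so its icon has to scale accordingly.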

These two points of course hint at using vector graphics like SVGs rather than raster images like JPGs/PNGs for icons; at least in Qt, vector graphics have seen something of a revival recently.

In QSkinny, flexible graphics have been supported from the start and fit nicely with the use of icons in Material 3 and Fluent 2.

Lastly, at some point those graphics might also need to be animated, e.g. with Lottie. Material 3 and Fluent 2 don’t seem to have an urgent need for this, but other design systems might.

5. Conclusion

Implementing Material 3 and Fluent 2 for QSkinny was a painful but solid way to future-proof our UI toolkit.

Modern toolkits should be following the paradigms of modern design systems: They should allow for easy definition of shadows, or generally speaking: They should allow for styling all aspects of a “box” like gradients, border colors, box shapes, and shadows.

In addition they should allow for dynamically changing all aspects of the current style like colors, fonts and the aforementioned box aspects.

In QSkinny terms, we believe that styling aspects of boxes and changing skin hints dynamically are well covered now that we have implemented both Material 3 and Fluent 2.

Of course there is more work to do when it comes to more advanced features for other design systems like inner shadows, multi-dimensional gradients or multiple shadow colors.

6. Quiz

Can you guess the “brand” from the colors being used in the screenshot below? Let me know and the first one to get it right will receive a drink of his/her choice next time we meet in person!

Which brand do these colors belong to? Hint: It is not really a company.
